
Note: All projects on this page were conceived by W. Chapin, with the exception of:

| 1999 | |||

|

Multi-Modal Watch Station (MMWS) for DDX | ||

| Where | AuSIM for SPAWAR, US Navy, San Diego and NSWC, US Navy, Dahlgren, VA | ||

| What | As a subcontractor to a larger team, AuSIM designed the sound and communication layer, employing audio elements of DesignSpace to create the first of a series of MVP systems. | ||

| Why | This was the first time a tracked headset had been commercially integrated into a military-grade geospatial 3D voice communication system. | ||

| Published | MMWS AuSIM Voice Systems | ||

| 2000 | |||

|

AuSIM3D | ||

| Where | Developed at AuSIM in Palo Alto and Los Altos, CA | ||

| What | AuSIM3D was the flagship product of AuSIM Inc, a software 3D audio technology spanning from client control to low-level signal processing. Originating as the cancelled R&D project "Toro" at Aureal, it was conceived as an object-oriented, host-based signal-processing engine, partnered with Intel's then "Native Signal Processing" (NSP), which later became part of Intel's Multi-Media Extensions (MMX). While AuSIM3D followed W. Chapin's vision, it was not until Dr. S.A. Rizzi challenged it and software architect Bryan Cook re-implemented it that AuSIM3D matured into a high-performing product. | ||

| Why | Before AuSIM3D, all 3D audio was computed in ASIC or DSP hardware, and the development cycle for a single improvement was measured in months. AuSIM3D cut that iteration cycle to seconds while still delivering fieldable performance. | ||

| Who | AuSIM team led by W. Chapin; B. Cook, architect | ||

| Published | AuSIM3D | ||

|

AuSIM InTheMix | ||

| Where | Developed at AuSIM in Los Altos, CA, presented at Siggraph '00 Emerging Technologies in New Orleans | ||

| What | InTheMix was a shared, immersive virtual bandstand exhibition, publicly installed in New Orleans for six days. | ||

| Why | To prove the relevance of an aural-only, shared immersive environment. | ||

| Who | AuSIM team led by W. Chapin | ||

| Published | Emerge ETech AuSIM | ||

| 2003 | |||

|

AuSIM 3DVx | ||

| Where | AuSIM / Fakespace Labs, Mountain View, CA | ||

| What | Led the concept, design, and execution of a mobile, wearable computing system and all of its accessories. 3DVx was a derivation of the MVP systems, ultimately intended for man-wearable deployment, and was the first to leverage streaming audio over IP. | ||

| Why | 3D audio was a generic technology that could be applied in multiple directions. Voice communication is one application with obvious benefits, but the technology needed to be integrated into a single package. Four years later, tracking, processing, and VoIP were integrated into a package called a "smartphone". | ||

| Who | AuSIM team led by W. Chapin | ||

| Published | AuSIM 3DVx | ||

| 2004 | |||

|

Concept and Technology Exploration for Transparent Hearing | ||

| Where | AuSIM and Fakespace in Mountain View, Sensimetrics in Somerville, MA, and Boston Univ. | ||

| What | Transparent Hearing was a six-month research effort to establish the state of the art and evaluate conceptual directions for protecting human hearing while retaining directional situational awareness. | ||

| Why | Service and industrial workers have historically discarded hearing protection because it deprives them of vital perception of their surroundings. This research summarizes what has to happen to achieve the goal and discusses possible approaches to a solution. | ||

| Who | Funded by US Army Natick Soldier Center and US Air Force Special Operations. Contributors: W. Chapin, A. Roginska, B. Cook, F. Surucu, and S. Foster of AuSIM, M. Bolas of Fakespace, P. Zurek, J. Desloge, and R. Beaudoin of Sensimetrics, B. Shinn-Cunningham and N. Durlach of Boston University | ||

| Published | Final Report | ||

| 2005 | |||

|

3DVx-Wearable | ||

| Where | AuSIM in Mountain View and Palo Alto, Human Systems in Hamilton, ON | ||

| What | Description | ||

| Why | Relevance | ||

| Who | People | ||

| Published | Link | ||

| 2006 | |||

|

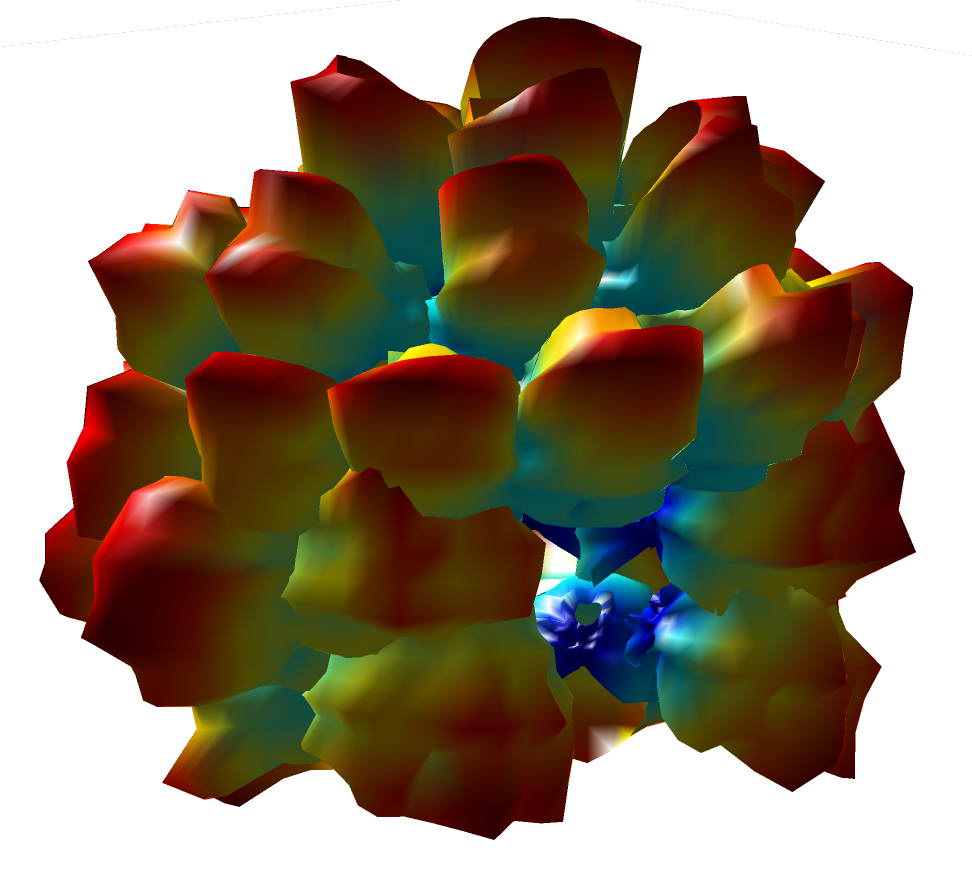

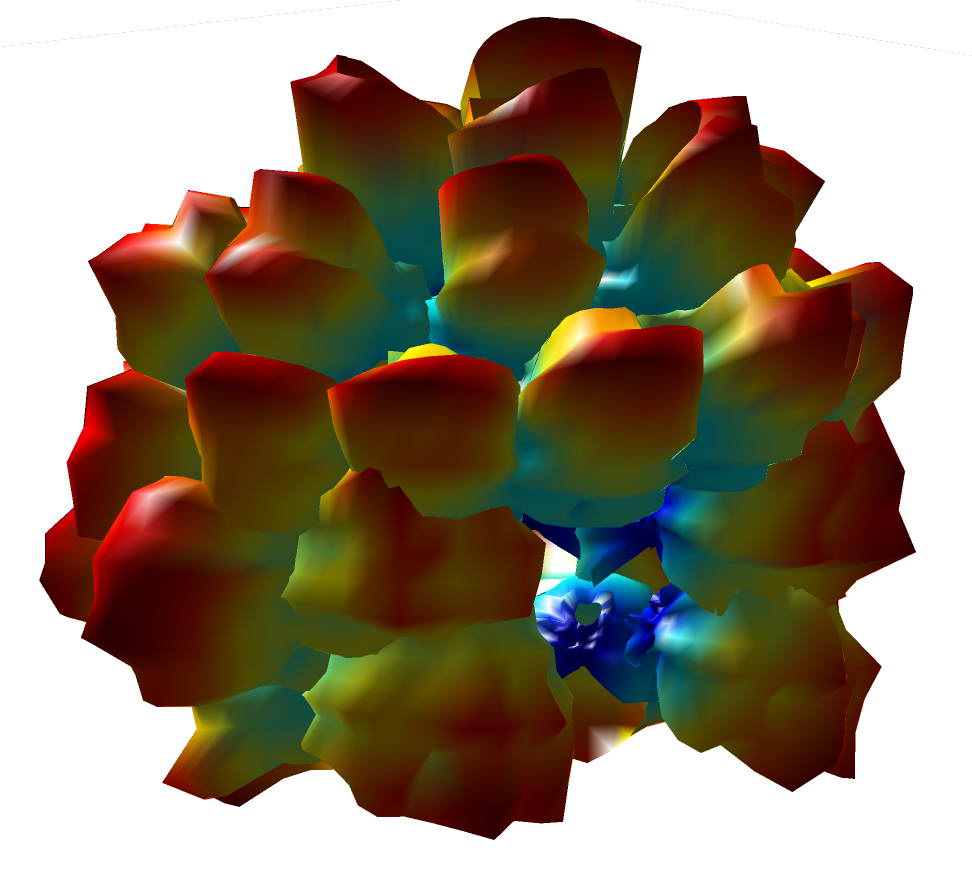

AuSIM3D Vectsonic Loudspeaker Display | ||

| Where | AuSIM at Palo Alto, CA | ||

| What | Mission-critical audio had always pointed towards head-coupled displays, but Dr. Steve Rizzi at NASA Langley convinced AuSIM that a room-sized display was necessary to convey critical auralizations. Vectsonic was the result of generalizing the propagation simulation established for two sinks (two ears, i.e. binaural) to N sinks, where each sink represents a transducer. | ||

| Why | Simplification and generalization produce a better, more versatile product. | ||

| Who | W. Chapin, S.A. Rizzi, J. Gustafsen, J. Faller | ||

| Published | AuSIM3D Vectsonic | ||

| 2008 | |||

|

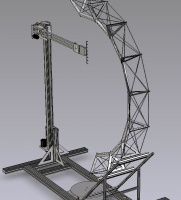

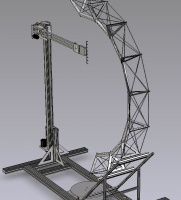

Acoustic Measurement Microphone Array and Robot (AMMAR) | ||

| Where | AuSIM at Mountain View, CA | ||

| What | Music researchers at McGill University wanted to study the directional radiation patterns of musicians playing their instruments. A single apparatus was conceived to encompass two approaches: a 64-channel planar microphone array and an 8-channel microphone array mounted on a robot arm. A turntable capable of rotating a large, seated musician provided the third axis of adjustment. | ||

| Why | Relevance | ||

| Who | G. Scavone, W. Chapin, L. Salek, A. Saroff, J. Storckman | ||

| Published | Link | ||